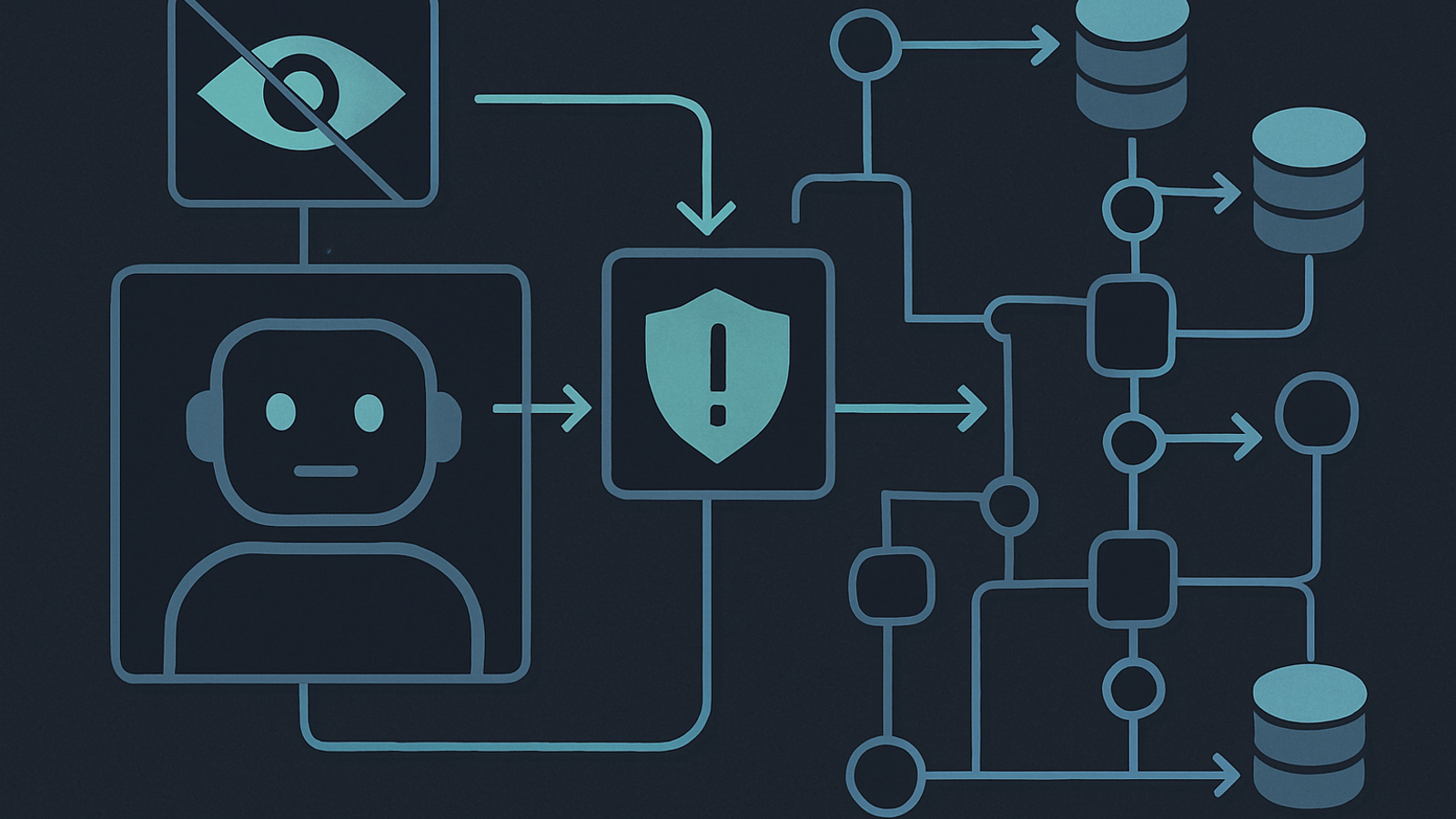

If your AI assistant can “see” more than a user should, it will eventually show something it shouldn’t. The fix is not a bigger model; it’s a retrieval layer that mirrors your existing permissions, keeps them fresh, and gives product teams clear guardrails. This practical 14‑day plan will help UK SMEs and charities make AI search and copilots respect access control in Microsoft 365 and Google Workspace, while improving answer quality and keeping costs predictable.

Good news: major platforms already support permission‑aware retrieval. Microsoft 365 Copilot enforces Microsoft Entra permissions and sensitivity labels at the point of content access; prompts and responses are kept within the Microsoft 365 service boundary and are not used to train foundation models. That means your governance needs to be right in SharePoint, OneDrive, Teams and connected sources, because Copilot honours what’s already there. learn.microsoft.com

SharePoint search performs “security trimming” (users only see items they can access). If you integrate external content via Microsoft Graph connectors, you must validate each connector’s access settings to avoid “Visible to everyone” mistakes. learn.microsoft.com

Before you start: a 10‑minute diagnostic

- Can you name the 3 content sources your AI relies on most (for example HR policies in SharePoint, client folders in OneDrive, supplier docs in Google Drive)?

- Do new hires and leavers automatically gain/lose access to those sources within 24 hours?

- Have you checked each Microsoft 365/Graph connector’s visibility setting this quarter? learn.microsoft.com

- When a team site’s permissions change, how quickly do those changes reflect in your search or copilot results?

- Do you have an agreed list of “sensitive collections” that must never be exposed via AI search (for example, board minutes, HR cases)?

- Are titles, summaries and headings written in plain English so the right content is retrieved first time? gov.uk

- Can you see who asked what, which sources were used, and which documents were cited?

- Do you track the percentage of answers that include a link to the authoritative source?

- Do you have a clear “pull the plug” switch for connectors or copilot access?

- Do you run periodic ROT (redundant, outdated, trivial) clean‑ups to reduce noise? gov.uk

Your 14‑day, permission‑aware retrieval plan

Days 1–2: choose the scope and assemble owners

- Pick one high‑value use case (for example, “HR policy answers for managers”). Define the audience, sources and what “good” looks like.

- Nominate a data owner per source (HR, Finance, Legal, Operations). Book 30‑minute slots with each.

Day 3: map permissions and connectors

- List all connectors in Microsoft 365 Copilot or Microsoft Search. For each, record visibility (“Only people with access” vs “Everyone”). If any show “Everyone”, delete and recreate with the correct setting. learn.microsoft.com

- Confirm that Copilot and SharePoint already enforce user permissions and labels. Your goal is to strengthen the source security model, not add a parallel one. learn.microsoft.com
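
The Day 3 connector review can be kept as a small inventory that you re-check each quarter. The sketch below is a minimal illustration, assuming you have typed or exported each connector's name, visibility and owner into a list. The `Connector` class and `flag_overshared` function are hypothetical names for this example and are not part of any Microsoft API.

```python
from dataclasses import dataclass

# Visibility labels as quoted in the plan above.
RESTRICTED = "Only people with access"
PUBLIC = "Everyone"


@dataclass(frozen=True)
class Connector:
    """One row of a hand-maintained connector inventory (hypothetical)."""
    name: str
    visibility: str
    owner: str


def flag_overshared(connectors: list[Connector]) -> list[Connector]:
    """Return connectors whose visibility is anything other than restricted."""
    return [c for c in connectors if c.visibility != RESTRICTED]


# Illustrative rows only; replace with your own inventory.
inventory = [
    Connector("HR policies file share", RESTRICTED, "HR"),
    Connector("Supplier wiki", PUBLIC, "Operations"),
]

for c in flag_overshared(inventory):
    print(f"Review: {c.name} (visibility: {c.visibility}, owner: {c.owner})")
```

Flagging anything that is not explicitly restricted, rather than only exact matches on "Everyone", means a blank or mistyped entry is also surfaced for review.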

Day 4: eliminate quick oversharing risks

- Audit the chosen sites/libraries for “Everyone” or broadly shared links and remove them where inappropriate.

- Group‑based access beats individual access. Move one‑off user permissions into well‑named groups to simplify future changes. This also reduces churn in permission metadata for indexing services. learn.microsoft.com
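
As a rough illustration of the Day 4 audit, the sketch below sorts a permissions export into broad grants to remove and individual grants to fold into groups. It assumes a hypothetical export of (site, principal, principal type) rows. The `Grant` fields, the `triage` function and the set of "broad" principals are assumptions to adapt to whatever report your tenant produces.

```python
from collections import defaultdict
from typing import NamedTuple


class Grant(NamedTuple):
    """One row of a hypothetical permissions export."""
    site: str
    principal: str
    principal_type: str  # "user" or "group" (assumed values)


# Principals treated as tenant-wide; adjust to match your own report.
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users"}


def triage(grants: list[Grant]) -> tuple[list[Grant], dict[str, list[str]]]:
    """Split grants into broad shares and per-site individual user grants."""
    broad = [g for g in grants if g.principal in BROAD_PRINCIPALS]
    individuals: dict[str, list[str]] = defaultdict(list)
    for g in grants:
        if g.principal_type == "user":
            individuals[g.site].append(g.principal)
    return broad, dict(individuals)


# Illustrative rows only.
grants = [
    Grant("HR Policies", "Everyone", "group"),
    Grant("HR Policies", "HR Managers", "group"),
    Grant("HR Policies", "sam@example.org", "user"),
    Grant("HR Policies", "priya@example.org", "user"),
]

broad, individuals = triage(grants)
print("Remove or justify:", [(g.site, g.principal) for g in broad])
print("Move into a named group:", individuals)
```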

Day 5: fix titles, summaries and headings for retrieval

- Front‑load titles with the user task (“Expenses: how to claim mileage”), add a one‑ or two‑sentence summary, and use meaningful headings. These small edits boost findability for both humans and AI. gov.uk

Day 6: set your freshness policy

- Define how fast permission changes must flow through to answers (for example, within 24 hours).

- If your search relies on scheduled crawls of SharePoint or file shares, note that some changes to properties or security settings are only picked up by a full crawl. Build this into your schedule. learn.microsoft.com

- If you use Azure AI Search to index external sources, understand how ACLs are ingested and refreshed; some preview features need explicit resyncs to pick up permission changes. learn.microsoft.com
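
A freshness policy is easier to enforce if you measure it. The sketch below is one possible way to check observed lag against the 24-hour example target. It assumes you record two timestamps by hand during testing: when a permission changed at source, and when the change first showed up in answers. The `within_slo` function and the sample observations are hypothetical.

```python
from datetime import datetime, timedelta, timezone

FRESHNESS_SLO = timedelta(hours=24)  # the example target from the plan


def within_slo(changed_at: datetime, reflected_at: datetime,
               slo: timedelta = FRESHNESS_SLO) -> bool:
    """True if the change showed up in results within the SLO window."""
    return reflected_at - changed_at <= slo


# Hypothetical observations:
# (description, permission changed at source, change visible in answers)
observations = [
    ("Leaver removed from HR group",
     datetime(2025, 3, 3, 9, 0, tzinfo=timezone.utc),
     datetime(2025, 3, 3, 21, 30, tzinfo=timezone.utc)),
    ("Finance site restricted",
     datetime(2025, 3, 4, 10, 0, tzinfo=timezone.utc),
     datetime(2025, 3, 6, 8, 0, tzinfo=timezone.utc)),
]

for label, changed, reflected in observations:
    lag = reflected - changed
    status = "OK" if within_slo(changed, reflected) else "BREACH"
    print(f"{status}: {label} took {lag}")
```

Storing timestamps in UTC avoids false breaches around clock changes.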

Day 7: build a small evaluation set

- Collect 25 real questions from managers and frontline staff. For each, note the expected source document and a sample “gold” answer. You’ll reuse this in routine checks.
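
One lightweight way to store the evaluation set is a line-per-question file that editors can extend without developer help. The sketch below assumes a JSON Lines layout. The `EvalCase` field names are illustrative, and the gold answer shown is a placeholder rather than real policy wording.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class EvalCase:
    """One question in the evaluation set (field names are illustrative)."""
    question: str
    expected_source: str  # title or path of the authoritative document
    gold_answer: str      # short reference answer agreed with the data owner


cases = [
    EvalCase(
        question="How do I claim mileage?",
        expected_source="Expenses: how to claim mileage",
        gold_answer="Placeholder: agree the reference wording with the data owner.",
    ),
]

# One JSON object per line, so new cases can be appended in any text editor.
lines = [json.dumps(asdict(case)) for case in cases]
print("\n".join(lines))
```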

Day 8: run permission‑aware tests

- Test with three personas: a manager, a regular staff member, and a contractor. Each asks the 25 questions. Record any answer that cites a source they should not access.

- Where an answer is blank for a user who should have access, verify the connector ACL and the source permissions first, not the model. learn.microsoft.com
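
The persona check above boils down to comparing what an answer cited with what that persona is entitled to read. The sketch below is a minimal version of that comparison, assuming you record citations by hand or from logs. The `ALLOWED` map, the source names and `find_overshares` are hypothetical and stand in for your real access model.

```python
# Hypothetical access map: which sources each persona is entitled to read.
ALLOWED = {
    "manager": {"HR policies", "Team handbook", "Manager guidance"},
    "staff": {"HR policies", "Team handbook"},
    "contractor": {"Team handbook"},
}


def find_overshares(persona: str, cited_sources: list[str]) -> list[str]:
    """Return cited sources that the persona should not be able to access."""
    return [s for s in cited_sources if s not in ALLOWED[persona]]


# Hypothetical test results: (persona, question, sources cited in the answer).
results = [
    ("manager", "How do I approve leave?", ["Manager guidance"]),
    ("contractor", "How do I approve leave?", ["Manager guidance"]),
    ("staff", "Where is the handbook?", ["Team handbook"]),
]

for persona, question, cited in results:
    leaked = find_overshares(persona, cited)
    if leaked:
        print(f"OVERSHARE: {persona} asked {question!r} and was shown {leaked}")
```

Any line printed here is an incident to investigate at the source or connector, in line with the advice to check permissions before blaming the model.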

Day 9: enable minimal observability

- Turn on logging for: question text, which sources were queried, which documents were returned, and the final citations. You’re aiming for traceability, not surveillance.
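
If your tooling lets you emit your own logs, a single structured record per question is usually enough. The sketch below shows one possible shape using Python's standard `logging` and `json` modules. The field names, the `build_record` helper and the `user_id` field (added so you can answer "who asked what") are assumptions, not a format required by any product.

```python
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("retrieval_audit")


def build_record(user_id: str, question: str, sources_queried: list[str],
                 documents_returned: list[str], citations: list[str]) -> dict:
    """Assemble one audit record covering the fields listed above."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "question": question,
        "sources_queried": sources_queried,
        "documents_returned": documents_returned,
        "citations": citations,
    }


# Illustrative values only.
record = build_record(
    user_id="u-1024",
    question="How do I claim mileage?",
    sources_queried=["HR policies"],
    documents_returned=["Expenses: how to claim mileage", "Travel policy"],
    citations=["Expenses: how to claim mileage"],
)
logger.info(json.dumps(record))
```

Logging an opaque user ID rather than a name keeps the record traceable while limiting what casual readers of the log can see.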

Day 10: overshare drill

- Ask your red team (or two curious colleagues) to try to retrieve board papers or HR cases via the copilot. If they can, raise a priority incident, disable the connector or restrict access to the site, and document the gap.

Day 11: remove noise (ROT) and duplicates

- Delete or archive content that is redundant, outdated or trivial to reduce irrelevant hits and speed up indexing. The Cabinet Office has used an automated ROT process to retire millions of legacy files; take inspiration from their approach and apply human checks for your context. gov.uk

Day 12: enrich the top 50 documents

- Add clear summaries and consistent headings. Keep language plain and task‑oriented to improve retrieval relevance and comprehension. gov.uk

Day 13: procurement checkpoint

- If you plan to add a search service or connector, validate it supports item‑level ACLs and group mapping, and check how permission changes propagate. Some platforms enforce ACLs at query time using the user’s token, which is ideal; others require periodic permission re‑ingest. learn.microsoft.com
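
To make the query-time option concrete, the sketch below shows the general idea of security trimming: candidate documents are filtered against the asking user's groups before anything reaches the model. It is a simplified illustration, not how any named platform implements it. `IndexedDoc`, `trim_results` and the group names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexedDoc:
    """A document in a hypothetical index, with the groups allowed to read it."""
    title: str
    allowed_groups: frozenset[str]


def trim_results(candidates: list[IndexedDoc],
                 user_groups: set[str]) -> list[IndexedDoc]:
    """Keep only documents that share at least one group with the user."""
    return [d for d in candidates if d.allowed_groups & user_groups]


# Illustrative index contents.
candidates = [
    IndexedDoc("Expenses: how to claim mileage", frozenset({"All Staff"})),
    IndexedDoc("Board minutes, March", frozenset({"Trustees"})),
]

visible = trim_results(candidates, user_groups={"All Staff", "HR Managers"})
print([d.title for d in visible])
```

In this sketch the `allowed_groups` stored with each document are a copy of the source permissions. That copy is exactly what goes stale when a platform relies on periodic re-ingest, so ask vendors where the equivalent data lives and how it is refreshed.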

Day 14: go/no‑go and handover

- Share results, residual risks, and a simple runbook for new sites, leavers/joiners, and connector changes. Align with the UK government’s AI cyber security code of practice principles on asset inventory and access control. gov.uk

What “good” looks like: KPIs you can track next week

| KPI | Target | Why it matters |
|---|---|---|
| Permission freshness | ≤ 24 hours from source change to reflected answer | Reduces risk that yesterday’s leaver still sees sensitive content today. Consider crawl and resync limits. learn.microsoft.com |
| Answer coverage (top tasks) | ≥ 80% of top 25 queries return a sourced answer | Shows your core content is findable and up to date. |
| Source‑linked answers | ≥ 95% include at least one clickable citation | Builds trust and allows rapid verification. |
| Overshare rate | 0 incidents in weekly tests | Non‑negotiable for reputation and safety. |
| ROT reduction | −20% of redundant/outdated files in the pilot scope | Less noise, faster indexing and better relevance. gov.uk |
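
Three of these KPIs can be computed directly from a weekly test run. The sketch below is one possible calculation, assuming each test question is recorded with three yes/no outcomes. The `RunResult` fields and the `kpis` function are hypothetical, and the sample run is made-up data for illustration.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    """Outcome of one question in a weekly run (fields are illustrative)."""
    answered: bool      # the assistant returned an answer
    has_citation: bool  # the answer included at least one source link
    overshared: bool    # a cited source was outside the persona's access


def kpis(results: list[RunResult]) -> dict[str, float]:
    """Compute answer coverage, source-linked rate, and overshare count."""
    total = len(results)
    answered = [r for r in results if r.answered]
    sourced = [r for r in answered if r.has_citation]
    return {
        # Share of all questions that got an answer with a source.
        "answer_coverage": len(sourced) / total if total else 0.0,
        # Share of answers given that carried at least one citation.
        "source_linked": len(sourced) / len(answered) if answered else 0.0,
        "overshare_incidents": float(sum(r.overshared for r in results)),
    }


# Made-up run of 25 questions: 22 sourced answers, 1 unsourced, 2 blank.
run = (
    [RunResult(True, True, False)] * 22
    + [RunResult(True, False, False)]
    + [RunResult(False, False, False)] * 2
)

for name, value in kpis(run).items():
    print(f"{name}: {value:.2f}")
```

Coverage is measured against all questions asked, while the source-linked rate is measured only against questions that received an answer, which matches how the two targets are worded in the table.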

Procurement questions to put in writing

Use these when buying a new enterprise search tool, a connector, or a vendor‑hosted copilot.

- Access model: Does the system enforce item‑level ACLs at query time using the user’s identity token? How are external groups mapped? learn.microsoft.com

- Permission sync: When SharePoint or Drive permissions change, how and when do you pick them up? Do you support targeted resyncs for ACLs without a full re‑index? learn.microsoft.com

- Connector visibility: Can admins verify that each connector is set to “Only people with access” rather than “Everyone”? learn.microsoft.com

- Security trimming: Do you preserve source ACLs for built‑in connectors (for example NTFS or SharePoint)? practical365.com

- Content quality: Do you expose per‑document titles/summaries so editors can improve findability without developer work? Use plain English guidance. gov.uk

- Auditability: Can we see which documents influenced an answer and who asked the question?

- Kill switch: Can we immediately disable a connector or source without deleting configuration?

- Standards: How does the product align with the UK AI cyber security code of practice for asset inventory and access control? gov.uk

Common pitfalls and how to avoid them

- Assuming “the AI leaked it.” Often the AI has faithfully surfaced content that was already over‑shared at source. Fix source permissions first; Copilot respects your existing controls. learn.microsoft.com

- Stale permission metadata in custom indexes. Some indexers won’t pick up ACL changes unless you trigger a resync or re‑ingest. Plan for it. learn.microsoft.com

- Unclear titles and headings. Poorly written documents waste tokens and time, hurting relevance. Apply GOV.UK content design basics. gov.uk

Risk and cost checklist

| Risk | Early warning | Low‑cost mitigation |
|---|---|---|
| Oversharing via legacy links | Search shows confidential docs for general users | Remove “Everyone” shares; verify each connector’s visibility; re‑run persona tests weekly. learn.microsoft.com |
| Stale permissions in index | Leaver still sees content in AI results | Set a 24‑hour freshness SLO; schedule ACL resyncs after bulk changes. learn.microsoft.com |
| Noisy, outdated content | Answers cite obsolete docs | Quarterly ROT clean‑up for high‑impact sites; enrich top 50 docs with clear summaries. gov.uk |
| Shadow connectors | Duplicate sources and inconsistent ACLs | Maintain an asset inventory and supplier controls per the UK AI cyber security code of practice. gov.uk |

Where this fits with your wider AI roadmap

Permission‑aware retrieval is one piece of a reliable AI stack. Pair it with lightweight observability and post‑incident learning to sustain trust and performance. For a rapid, blameless approach, see our template, “The 60‑minute AI incident review”. If you’re improving content quality, combine today’s plan with “Chunk once, answer everywhere” and our “30‑day AI observability sprint”. Teams rolling out a broader knowledge layer can also read the hybrid retrieval playbook, “From SharePoint to solid answers”.

Final word

The safest, most useful AI assistants don’t see anything new; they simply reflect the access users already have, and they do it quickly and clearly. If you follow this plan, you will cut the risk of accidental oversharing, improve answer quality, and give your leadership confidence to scale AI in 2026.