If your AI pilot sometimes “guesses”, struggles with acronyms, or surfaces out‑of‑date pages, the culprit is usually retrieval—not the model. The good news: you can stabilise retrieval quality in two weeks by tightening three foundations: the way you split content into chunks, the metadata you attach, and the search pattern you use.

This article gives non‑technical leaders a 14‑day plan to deliver predictable, measurable gains without a rewrite. It assumes you already have a basic Retrieval‑Augmented Generation (RAG) pilot and want to improve accuracy, latency and cost before scaling.

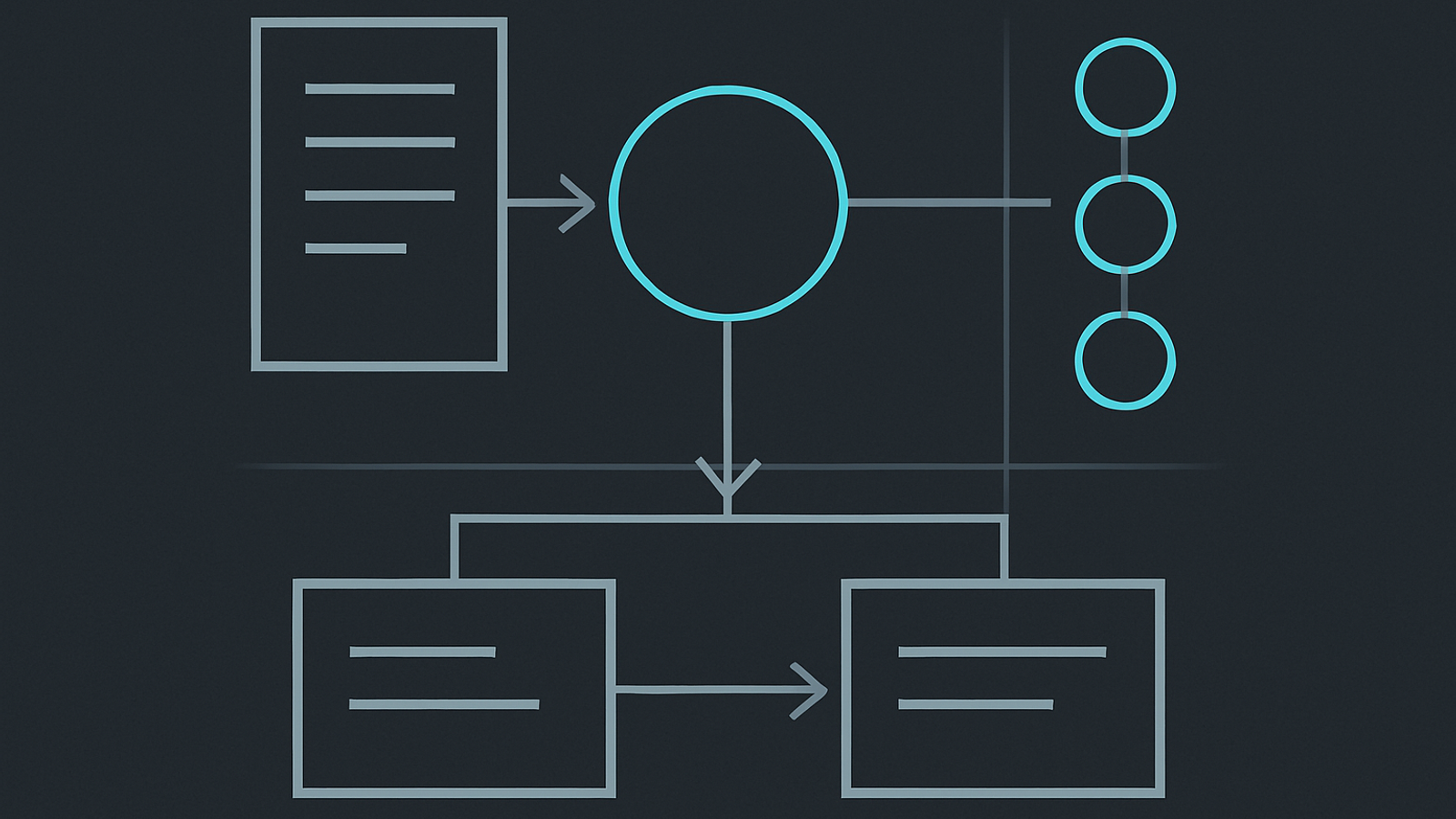

What “retrieval foundations” actually means

- Chunking: how you slice documents into retrievable passages. Bad chunking hides the one paragraph that answers the question. Recent research suggests chunking strategy materially affects RAG quality; see, for example, papers on hierarchical chunking (HiChunk) and chunk‑free retrieval (CFIC).

- Metadata: labels that help you filter, rank and trust results (e.g. source, date, owner, region, product, permission). UK public sector guidance recommends simple, standardised metadata for findability; DCAT and Dublin Core are sensible, lightweight choices. DCAT on GOV.UK; Dublin Core.

- Hybrid search: combine keyword (BM25) and semantic (vector) search so you match both exact terms and meaning. It’s now standard in mature tools: Weaviate, Pinecone and Elastic all support it. If you’ve never heard of BM25, it’s the classic keyword‑ranking function used by search engines. BM25 explained.

Symptoms and likely causes

| Symptom | Likely retrieval cause | Quick test |
|---|---|---|
| Answers cite the wrong policy version | No “effective date” metadata; ranking not preferring newest | Filter by date; compare groundedness/faithfulness scores |
| Fails on acronyms and part numbers | Vector‑only retrieval; no keyword fallback | Run the same query with hybrid alpha tilted to keywords |
| Hallucinations despite “context provided” | Chunks too long, noisy or off‑topic | Measure context precision and recall on 50 real queries |
| Slow answers during peaks | Too many chunks re‑ranked; no max‑context cap | Cap retrieved items to k=10 and add a re‑ranker budget |
| Costs drift upward | Oversized chunks and re‑ranking everything | Track average tokens per answer and re‑rank hit‑rate |

The 14‑day plan (no code, measurable outcomes)

Days 1–3: Get real questions and define success

- Collect 50–100 real queries from tickets, email and search logs. Tag 10 “must‑win” ones (board‑level topics, compliance‑sensitive, or high volume).

- Create a tiny ground‑truth set: for each query, record the correct page or paragraph.

- Choose evaluation metrics you’ll re‑use weekly:

  - Recall@k and nDCG@k for retrieval quality. RAG metrics overview.

  - Faithfulness/groundedness to check the answer is supported by context. Ragas metrics.

  - Latency p95 (target under 2–3s for internal use) and cost per answer (tokens, storage, queries).

- Set targets for a go/no‑go at Day 14, e.g. Recall@10 ≥ 0.75, Faithfulness ≥ 0.9, p95 ≤ 3s, cost ≤ £0.03/answer.
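For the team running the evaluation, the two retrieval metrics above can be computed in a few lines. This is a minimal sketch, assuming each query has a list of retrieved document IDs (in ranked order, without duplicates) and a ground‑truth set of relevant IDs; the function names are illustrative and relevance is treated as binary (relevant or not).

```python
import math


def recall_at_k(retrieved, relevant, k=10):
    """Fraction of the relevant items that appear in the top-k results."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def ndcg_at_k(retrieved, relevant, k=10):
    """Binary-relevance nDCG: rewards relevant items ranked near the top."""
    relevant = set(relevant)
    # Discounted gain of the actual ranking (rank 0 is the top result).
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, doc_id in enumerate(retrieved[:k])
        if doc_id in relevant
    )
    # Best achievable gain: all relevant items packed at the top.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg else 0.0
```

Average each metric over your 50–100 queries to get the single number you compare against the Day 14 targets.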

Days 4–6: Fix chunking and add “zero‑regret” metadata

You don’t need a data catalogue project. Add a few well‑chosen fields and you’ll unlock filtering, ranking, and trust.

- Chunking: start with short, structure‑aware chunks (~200–400 words), with light overlap if the source is narrative. Keep tables and bullet lists intact. If your content is highly structured (policies, contracts), prefer section‑based chunks with headings as boundaries. Research shows chunking strategy matters, but don’t over‑optimise on day one. HiChunk; CFIC.

- Must‑have metadata (keep it light):

  - title, description, creator/owner, publisher/department (Dublin Core)

  - issued_date, modified_date, version

  - document_type (policy, contract, SOP, FAQ, spec)

  - product/service, region, audience (e.g. Finance, Field Ops)

  - permission or visibility flag (public, staff, restricted)

- Trust fields: add “effective_from” and “superseded_by” so ranking can prefer current versions.

- Privacy quick‑check: avoid storing unnecessary personal data in chunks. If you must process logs or content with personal data, follow anonymisation and pseudonymisation good practice. ICO anonymisation guidance.
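If it helps your engineers picture the chunking and metadata rules above, here is a rough sketch of section‑based chunking. It is illustrative only: it assumes documents arrive as text with markdown‑style `#` headings on their own lines and blank lines between paragraphs, and the `Chunk` class and `chunk_by_headings` function are hypothetical names, not part of any tool.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    heading: str
    metadata: dict = field(default_factory=dict)


def chunk_by_headings(document, doc_metadata, max_words=400):
    """Use headings as chunk boundaries; split long sections between paragraphs."""
    chunks, heading, paragraphs = [], "", []

    def flush():
        # Pack the current section's paragraphs into chunks of up to max_words.
        current, count = [], 0
        for para in paragraphs:
            words = len(para.split())
            if current and count + words > max_words:
                chunks.append(Chunk("\n\n".join(current), heading, dict(doc_metadata)))
                current, count = [], 0
            current.append(para)
            count += words
        if current:
            chunks.append(Chunk("\n\n".join(current), heading, dict(doc_metadata)))

    for block in document.split("\n\n"):
        block = block.strip()
        if not block:
            continue
        if block.startswith("#"):
            flush()  # close the previous section before starting a new one
            heading, paragraphs = block.lstrip("# ").strip(), []
        else:
            paragraphs.append(block)
    flush()
    return chunks
```

Because a block is never split internally, a table or bullet list written without blank lines stays intact, at the price of an occasional chunk longer than `max_words`. Every chunk inherits the document‑level metadata, so fields such as `effective_from` and `permission` are available for filtering later.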

Days 7–9: Turn on hybrid search (keyword + vector)

- Default to hybrid. Run keyword (BM25) and vector searches in parallel and blend results (many tools expose an alpha parameter to weight semantic vs keyword relevance). How fusion works; Pinecone hybrid index.

- Set sensible defaults: alpha ~0.5 for general use; increase toward 0.8 for vague queries (“How do we claim expenses?”) and lower toward 0.2 for exact queries (“Form F-112”).

- Blend robustly: if your platform supports Reciprocal Rank Fusion (RRF), it’s a strong, simple rank‑combining method. RRF paper.

- Filters first: use metadata filters (product, region, version) before re‑ranking to reduce cost and noise.
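Most platforms do this blending for you, but it can help to see what the two approaches above amount to. The sketch below is a simplified illustration, not any vendor’s implementation: it assumes each search returns a `{doc_id: score}` map (for alpha blending) or a ranked list of IDs (for RRF), and the function names are made up for the example. The alpha convention matches the bullets above: higher alpha leans semantic, lower alpha leans keyword.

```python
def min_max(scores):
    """Scale a {doc_id: score} map to 0-1 so keyword and vector scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def alpha_blend(keyword_scores, vector_scores, alpha=0.5):
    """alpha=1.0 is pure vector (semantic); alpha=0.0 is pure keyword (BM25)."""
    kw, vec = min_max(keyword_scores), min_max(vector_scores)
    docs = set(kw) | set(vec)
    blended = {d: alpha * vec.get(d, 0.0) + (1 - alpha) * kw.get(d, 0.0) for d in docs}
    return sorted(blended, key=blended.get, reverse=True)


def reciprocal_rank_fusion(rankings, k=60):
    """Sum 1 / (k + rank) across ranked lists; k=60 is the constant used in the RRF paper."""
    fused = {}
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            fused[doc] = fused.get(doc, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=fused.get, reverse=True)
```

RRF only looks at rank positions, so it needs no score normalisation; that is a large part of why it is considered a robust default.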

Days 10–12: Re‑rank, de‑noise and budget latency

- Re‑rank the top 50 → 10. Use a cross‑encoder or similar to promote passages that truly answer the query. Cap total context tokens to protect latency and cost.

- Freshness bias: when two items tie, prefer the newest “effective_from” date unless the query specifies a version.

- Exact‑term fallbacks: for acronyms, SKUs and clauses, add a rule to include any exact keyword hit even if its vector similarity is low.

- Guardrails: if faithfulness drops below 0.8, return a clarifying question or a link rather than a confident answer.
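One way to picture the exact‑term fallback, freshness bias and context cap working together is the sketch below. It is an assumption‑laden illustration: the candidate fields (`id`, `text`, `score`, `effective_from`) and the `select_context` function are hypothetical, the re‑ranker score is taken as given, and a word count stands in for a real token count.

```python
from datetime import date


def select_context(candidates, query_terms, max_passages=10, max_tokens=1500):
    """Choose context passages from re-ranked candidates within a passage and token budget."""
    terms = [t.lower() for t in query_terms]

    def has_exact_term(candidate):
        # Blunt substring match; a real system would match on token boundaries.
        return any(t in candidate["text"].lower() for t in terms)

    # Exact keyword hits first, then re-ranker score, then newest effective date on ties.
    ordered = sorted(
        candidates,
        key=lambda c: (has_exact_term(c), c["score"], c["effective_from"]),
        reverse=True,
    )

    selected, used = [], 0
    for candidate in ordered:
        if len(selected) == max_passages:
            break
        tokens = len(candidate["text"].split())  # crude stand-in for a tokenizer
        if used + tokens > max_tokens:
            continue  # skip passages that would exceed the context budget
        selected.append(candidate)
        used += tokens
    return selected
```

Putting exact hits ahead of everything else is a deliberately simple rule; if it crowds out better passages, a softer option is to add a fixed score bonus instead.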

Days 13–14: Evaluate, decide, document

- Run your 50–100 queries and record metrics:

  - Retrieval: Recall@10 and nDCG@10

  - Answer: Faithfulness/groundedness, Answer Relevance

  - Ops: p95 latency, average tokens per answer, cost per answer

- Compare to the targets you set in Days 1–3. If you miss a threshold, adjust alpha, k, or filters and re‑run only the missed queries.

- Write a one‑page retrieval profile: chunk rules, metadata fields, hybrid settings, re‑ranker cap, acceptance thresholds and a rollback plan.
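The go/no‑go decision can be made mechanical so nobody argues about it on Day 14. The sketch below uses the example thresholds from the target list earlier in this plan; the metric names, the `TARGETS` dictionary and the `go_no_go` function are illustrative, and p95 is computed with the simple nearest‑rank method.

```python
import math


def p95(values):
    """Nearest-rank 95th percentile of a list of measurements."""
    if not values:
        raise ValueError("p95 needs at least one measurement")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


# Example thresholds; replace with the targets you agreed in Days 1-3.
TARGETS = {
    "recall_at_10": (">=", 0.75),
    "faithfulness": (">=", 0.9),
    "p95_latency_s": ("<=", 3.0),
    "cost_per_answer_gbp": ("<=", 0.03),
}


def go_no_go(measured, targets=TARGETS):
    """Return ("go" | "no-go", list of metrics that missed their threshold)."""
    failures = []
    for name, (op, threshold) in targets.items():
        value = measured[name]
        passed = value >= threshold if op == ">=" else value <= threshold
        if not passed:
            failures.append(name)
    return ("go" if not failures else "no-go", failures)
```

The list of failed metrics tells you which lever to adjust before re‑running.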

What to ask vendors this week

When you speak to search/vector database vendors or platform teams, use these questions to avoid surprises:

- Hybrid search: do you support parallel BM25 + vector search with configurable fusion? Can we adjust the weighting per query?

- Re‑ranking: built‑in cross‑encoders or connect our own? What’s the typical p95 overhead at k=50?

- Metadata: can we index and filter on 5–10 fields, including dates and permissions, without full re‑ingest?

- Updates: can we upsert a single chunk without recalculating the whole document?

- Limits: maximum vector dimensions, index size, and query concurrency before performance degrades.

- Observability: per‑query diagnostics (scores, filters applied, versions) and a weekly export of signals for evaluation.

- Pricing: how are storage, queries, re‑ranking and egress billed? Any auto‑scaling controls to cap spend?

For a deeper procurement view, see our buyer playbooks on AI contracts and how to avoid nine common procurement traps.

KPIs you can track from Monday

- Quality: Recall@10, nDCG@10, Faithfulness (weekly trend; target ≥0.9).

- Efficiency: p95 latency (≤3s internal), average candidates re‑ranked per query (target ≤50), context passages per answer (target ≤10), tokens per answer.

- Cost: cost per answer (model + infra), storage cost per 1k chunks, re‑ranking hit‑rate.

- Adoption: top 10 queries coverage, satisfaction score on “Did this answer your question?” prompt.

- Trust: % answers citing latest effective version; % answers with inline citations.

Risk and cost guardrails

| Risk | Mitigation | Owner | Budget guardrail |
|---|---|---|---|
| Hallucination on sensitive topics | Faithfulness gate; require citation; fall back to snippets | Product owner | Reject any release with Faithfulness < 0.85 |
| Costs creep up with re‑ranking | Cap k to 50, re‑rank to 10; track tokens/query | Engineering lead | £0.03 max per internal answer |
| Out‑of‑date answers | Effective dates in metadata; freshness bias | Content owner | ≥95% answers cite current version |
| PII leakage in chunks | Redact before indexing; use pseudonymisation where needed | DPO | Zero unmasked PII in sampled chunks |

If you need a fuller rollout plan beyond two weeks, see our 6‑week RAG blueprint and the 10 tests that predict AI quality.

Practical defaults (you can change later)

- Chunk size: 200–400 words; align to headings and lists; small overlap only where continuity matters.

- Metadata fields: title, description, owner, issued_date, modified_date, effective_from, document_type, product/service, region, audience, permission.

- Hybrid search: start at alpha=0.5; index both dense vectors and keyword (BM25) terms; enable filters for document_type, product, region and effective_from.

- Re‑ranker budget: 50 candidates → 10 context passages; max 1,000–1,500 context tokens into the model.

- Evaluation cadence: weekly 30‑minute review of metrics and three failed queries.
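If your team prefers to keep these defaults in version control rather than on a wiki page, they could be captured as a small settings object. This is only a sketch: the `RetrievalProfile` class and its field names are hypothetical, and the values simply mirror the defaults listed above (using the upper end of the context‑token range).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievalProfile:
    chunk_min_words: int = 200
    chunk_max_words: int = 400
    hybrid_alpha: float = 0.5  # 0 = keyword only, 1 = vector only
    filter_fields: tuple = ("document_type", "product", "region", "effective_from")
    rerank_candidates: int = 50
    context_passages: int = 10
    max_context_tokens: int = 1500

    def validate(self):
        """Catch settings that contradict each other before they reach production."""
        if not 0.0 <= self.hybrid_alpha <= 1.0:
            raise ValueError("hybrid_alpha must be between 0 and 1")
        if self.chunk_min_words > self.chunk_max_words:
            raise ValueError("chunk_min_words cannot exceed chunk_max_words")
        if self.context_passages > self.rerank_candidates:
            raise ValueError("context_passages cannot exceed rerank_candidates")
        return self
```

Changing a default then becomes a reviewed, dated change, which makes rollback straightforward.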

Why hybrid won’t blow up your latency

Modern engines run keyword and vector lookups in parallel and merge the rankings, so the added latency is typically tens of milliseconds, not seconds, in return for big gains on exact‑term queries. Documentation from Weaviate and Elastic describes built‑in fusion methods; Pinecone provides sparse‑dense hybrid indexes with tunable weighting. See Weaviate hybrid, Elastic 8.9 hybrid, Pinecone hybrid.

Governance without red tape

You don’t need a new policy to label documents consistently. Borrow a handful of metadata elements from UK public sector practice so your search can filter and rank sensibly today—and so you can plug into catalogues later if needed. Start with DCAT/Dublin Core fields and add business‑specific tags. Metadata standards collection.

For privacy, keep personal data out of chunks by default. Where you process personal information for internal analytics or logs, the ICO guidance explains when anonymisation or pseudonymisation is appropriate and how to reduce re‑identification risk. ICO on pseudonymisation.

Executive checklist

- We have 50–100 real queries with ground truth.

- Chunking is short, structure‑aware and consistent.

- Metadata includes owner, dates, version, type, product, region, audience, permission.

- Hybrid search is on, with per‑query weighting.

- We filter by metadata before re‑ranking.

- We track Recall@10, Faithfulness, p95 latency, tokens and cost per answer every week.

- We have a rollback if metrics dip (return links/snippets, not confident answers).